Introduction

Human movement is a form of non-verbal communication that conveys information about its performer. Analyzing and understanding human movement with computational methods is an active research topic in fields such as human-computer interaction, computer animation, and sports and health research. Raw movement data, however, say little about the movement on their own. To interpret a movement and draw conclusions about it, one needs to extract and represent a set of spatial, temporal, and qualitative characteristics from the raw data. Visual representation systems can then integrate these characteristics and convey them to novice and expert human observers.
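As a small illustration of turning raw data into a movement characteristic, the sketch below computes the per-frame speed of a single joint from motion-capture positions. It is a minimal example under stated assumptions, not Mova's own implementation: the function name `joint_speed`, the input layout (one (x, y, z) tuple per frame), and a constant capture rate `fps` are all hypothetical.

```python
import math
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


def joint_speed(positions: List[Vec3], fps: float) -> List[float]:
    """Per-frame speed of one joint using forward finite differences.

    Hypothetical helper. `positions` holds one (x, y, z) sample per frame
    (e.g. a mocap marker or skeleton joint); `fps` is the capture rate,
    assumed constant. Returns len(positions) - 1 speeds in
    position-units per second.
    """
    if fps <= 0:
        raise ValueError("fps must be positive")
    # Distance travelled between consecutive frames, divided by the frame
    # interval 1/fps (i.e. multiplied by fps).
    return [math.dist(a, b) * fps for a, b in zip(positions, positions[1:])]


if __name__ == "__main__":
    # A wrist moving 5 mm per frame at 100 frames per second.
    wrist = [(0.0, 0.0, 0.0), (3.0, 4.0, 0.0), (6.0, 8.0, 0.0)]
    print(joint_speed(wrist, fps=100.0))  # [500.0, 500.0] (mm/s)
```

Speed is only one low-level spatial-temporal feature; qualitative characteristics would typically be built on top of quantities like this one.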

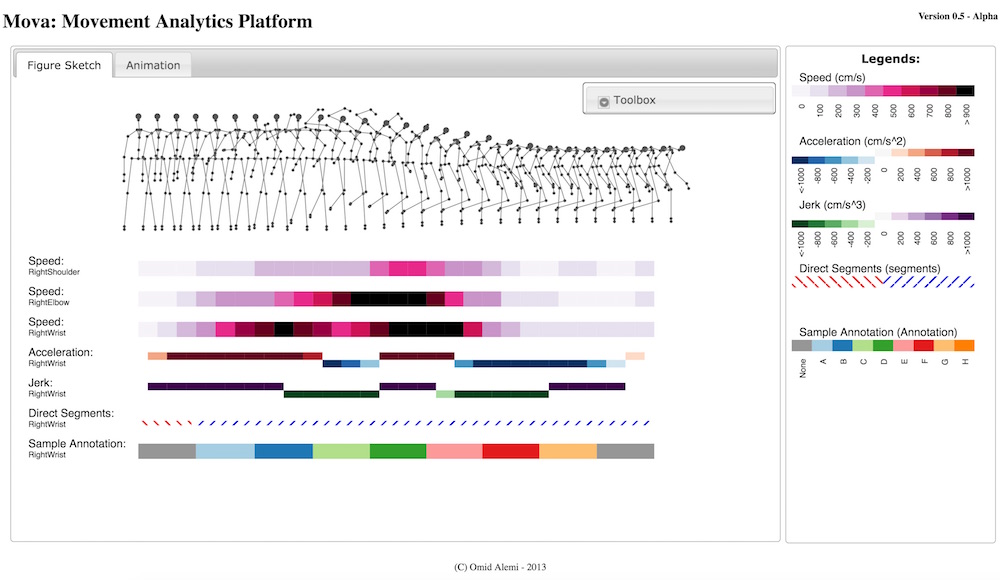

Mova is an interactive movement analytics platform that integrates an extensible set of feature extraction methods with a visualization engine in an interactive environment. Using Mova, you can visually analyze the movements of a human actor: for example, to evaluate the performance of a student dancer or to look for movement abnormalities in a patient. Mova is also a multi-modal data visualization tool. It provides a platform for integrating and visualizing human movement and its characteristics from a variety of data sources and representations; for example, video recordings, motion capture, and physiological data of the same movement can be analyzed and visualized together. Furthermore, Mova can serve as a research tool that facilitates the development and evaluation of movement feature extraction techniques and movement visualizations.
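One common way to make a set of feature extraction methods extensible is a registry that maps feature names to functions, so new methods can be plugged in without changing the code that calls them. The sketch below assumes this design purely for illustration; the names `FEATURES`, `register_feature`, `extract`, and the two sample features are hypothetical and are not taken from Mova's code.

```python
import math
from typing import Callable, Dict, List, Tuple

Vec3 = Tuple[float, float, float]
Trajectory = List[Vec3]                 # one (x, y, z) sample per frame
Clip = Dict[str, Trajectory]            # joint name -> trajectory
# Shared signature; fps is passed so time-based features can use it.
Feature = Callable[[Trajectory, float], List[float]]

# Hypothetical registry of feature extraction methods, keyed by name.
FEATURES: Dict[str, Feature] = {}


def register_feature(name: str) -> Callable[[Feature], Feature]:
    """Decorator that adds a feature function to FEATURES under `name`."""
    def decorator(fn: Feature) -> Feature:
        FEATURES[name] = fn
        return fn
    return decorator


@register_feature("height")
def height(traj: Trajectory, fps: float) -> List[float]:
    # Vertical coordinate per frame; assumes the y axis points up.
    return [y for _, y, _ in traj]


@register_feature("displacement")
def displacement(traj: Trajectory, fps: float) -> List[float]:
    # Straight-line distance from the first frame's position, per frame.
    return [math.dist(traj[0], p) for p in traj] if traj else []


def extract(clip: Clip, joint: str, feature: str, fps: float) -> List[float]:
    """Run one registered feature on one joint's trajectory."""
    if feature not in FEATURES:
        raise KeyError(f"unknown feature: {feature!r}")
    return FEATURES[feature](clip[joint], fps)
```

For example, `extract({"wrist": [(0.0, 1.0, 0.0), (3.0, 5.0, 0.0)]}, "wrist", "displacement", 100.0)` returns `[0.0, 5.0]`. A visualization layer built this way could list `FEATURES.keys()` to offer the available features to the user.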

Implementation

Mova is implemented as an open-source web application that runs inside a web browser. A prototype of Mova is currently available here. With this prototype, you can choose a sample movement, add features, and visualize the data. You can also watch a tutorial video here.

Mova is also the default visualization tool for the open-source movement database MoDa: http://moda.movingstories.ca.

Road Map

Planned future developments of Mova include:

- better support for combining multi-modal movement data such as video, mocap, accelerometers, breath sensors, and physiological sensors

- a rich library of state-of-the-art movement feature extraction methods from the literature

- online scripting for feature extraction and visualization

- automatic key-frame extraction

- support for features that involve a group of joints

- support for comparing the features of two or more movement clips

- support for inserting annotations into a movement clip

- more variations of visualization techniques

Publication

Omid Alemi, Philippe Pasquier, and Chris Shaw. 2014. Mova: Interactive Movement Analytics Platform. In Proceedings of the 2014 International Workshop on Movement and Computing (MOCO '14), pages 37-42. DOI