Introduction

AffectNet captures and controls the valence and arousal qualities of movement patterns using a single-layer Factored Conditional Restricted Boltzmann Machine (FCRBM). We train the FCRBM on a corpus of affect-expressive motion capture data from two actors performing various movements, each time modulated to a different level of arousal and valence. Agents can then use the FCRBM to control the emotional qualities of their movements for any given combination of valence and arousal, including values beyond those that exist in the training data.

Affective Motion Graph Data

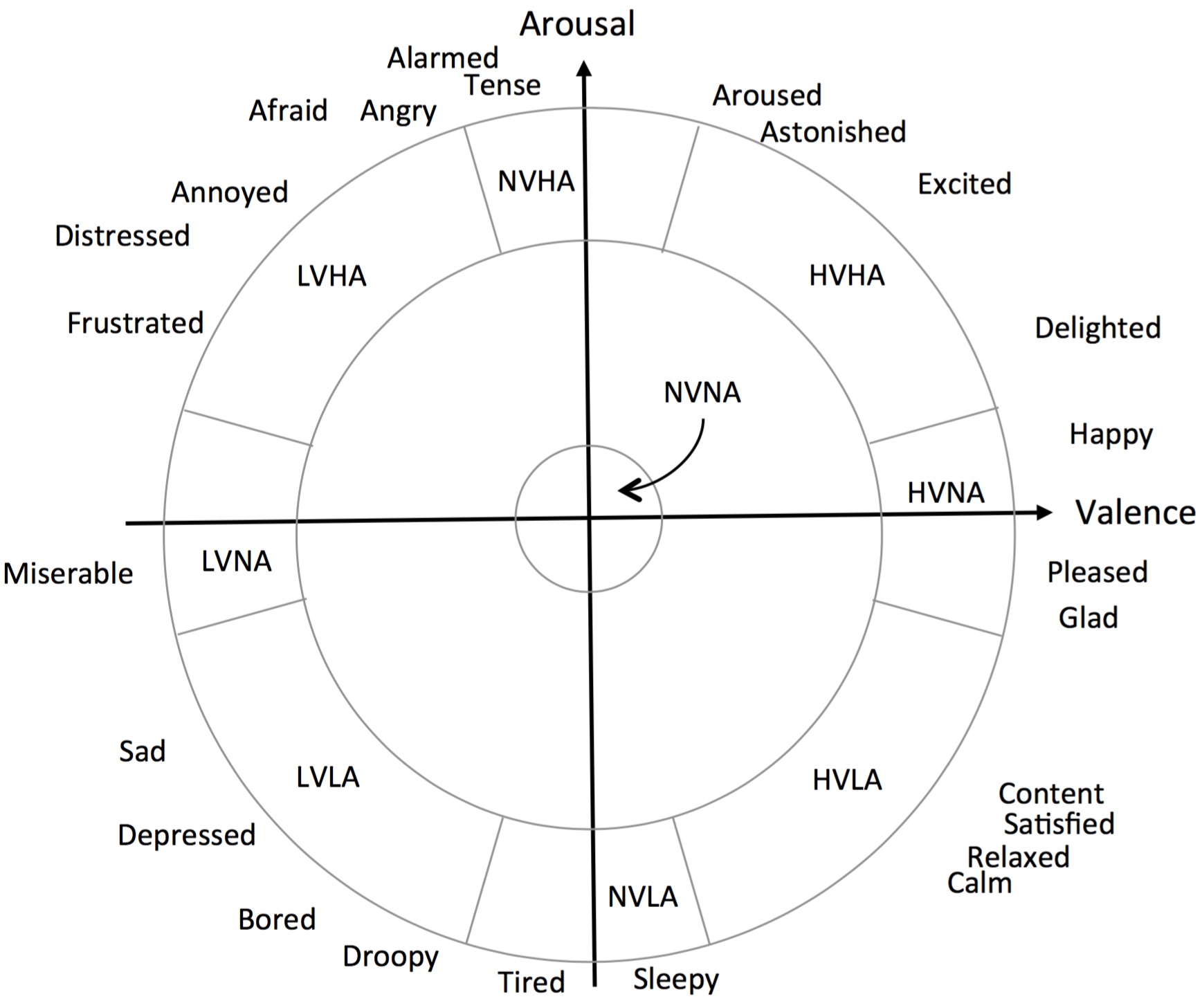

We use the valence and arousal dimensions to describe the affective state of movements, since together they define the affective state with two degrees of freedom, each on a continuum. Because the space is continuous, it is better suited than categorical models for learning a generalized model of affect, and it allows the smooth transitions between affective states that are essential for interactive applications.
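Because both dimensions are continuous, a transition between two affective states can be described as a path through the valence-arousal plane. The sketch below linearly interpolates a (valence, arousal) pair over a number of frames. The scaling to [-1, 1] (low = -1, neutral = 0, high = 1) and the function name `affect_trajectory` are assumptions for illustration, not part of AffectNet.

```python
import numpy as np


def affect_trajectory(start, end, n_frames):
    """Linearly interpolate a (valence, arousal) pair over n_frames frames.

    Assumes both dimensions are scaled to [-1, 1]; this scaling is hypothetical.
    """
    start = np.asarray(start, dtype=float)
    end = np.asarray(end, dtype=float)
    # Interpolation weights run from 0 (first frame) to 1 (last frame), one row per frame
    alphas = np.linspace(0.0, 1.0, n_frames)[:, None]
    return (1.0 - alphas) * start + alphas * end


# From low valence / low arousal to high valence / high arousal over 5 frames
traj = affect_trajectory((-1.0, -1.0), (1.0, 1.0), 5)
print(traj.shape)  # (5, 2)
```

Each row could serve as the per-frame affect input to a generative model; an easing curve could replace the linear ramp if a gentler onset and ending were wanted.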

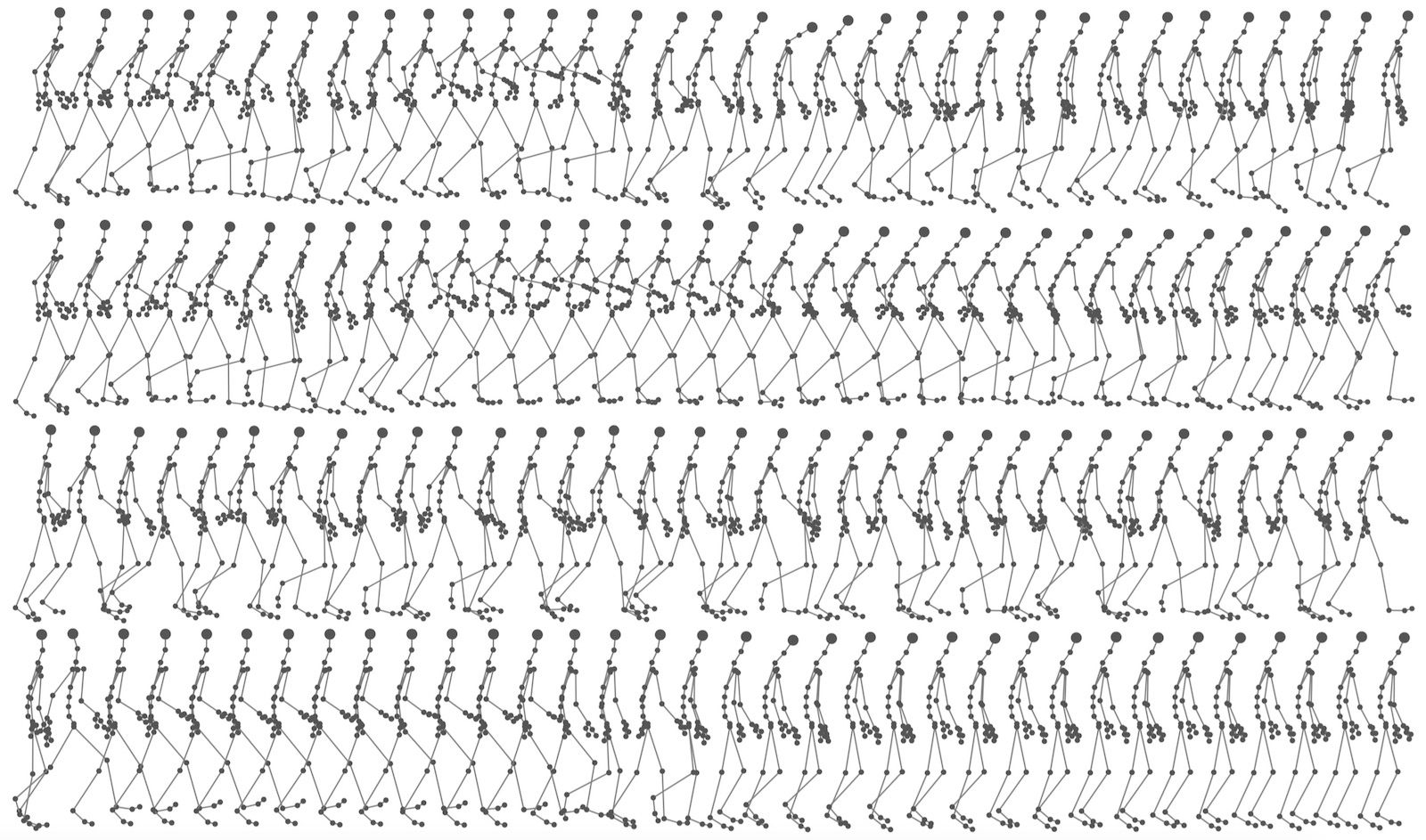

We train this system on the Affective Motion Graph data set. Each movement sequence is performed in nine different expressive modulations, as indicated in the figure: low, neutral, and high levels of valence, each combined with low, neutral, and high levels of arousal (3 × 3 = 9). Each emotional modulation is expressed through full-body movement, mainly via the body posture (its shape), changes in the effort of the body parts, and occasional arm gestures. Each modulation was also repeated four times to increase the variability of the training data. All of the recorded motion capture data and the reference videos are available at our MoDa Repository.
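The recording design amounts to a 3 × 3 grid of (valence, arousal) levels, each performed four times, giving 36 takes of a movement sequence. The hypothetical helper `expressive_takes` below enumerates that grid; the numeric encoding of the levels, the placeholder movement name, and the dictionary layout of a take are assumptions, not the data set's actual file format.

```python
from itertools import product

# Assumed numeric encoding of the three levels (not the data set's own labels)
LEVELS = {"low": -1.0, "neutral": 0.0, "high": 1.0}
N_REPETITIONS = 4  # each modulation was performed four times


def expressive_takes(movement):
    """Enumerate every (valence, arousal, repetition) take of one movement sequence."""
    takes = []
    # product(..., repeat=2) pairs every valence level with every arousal level: 3 x 3 = 9
    for (v_name, valence), (a_name, arousal) in product(LEVELS.items(), repeat=2):
        for rep in range(1, N_REPETITIONS + 1):
            takes.append({
                "movement": movement,
                "label": f"{v_name} valence / {a_name} arousal",
                "valence": valence,
                "arousal": arousal,
                "repetition": rep,
            })
    return takes


takes = expressive_takes("walk")  # "walk" is a placeholder movement name
print(len(takes))  # 36
```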

The Model

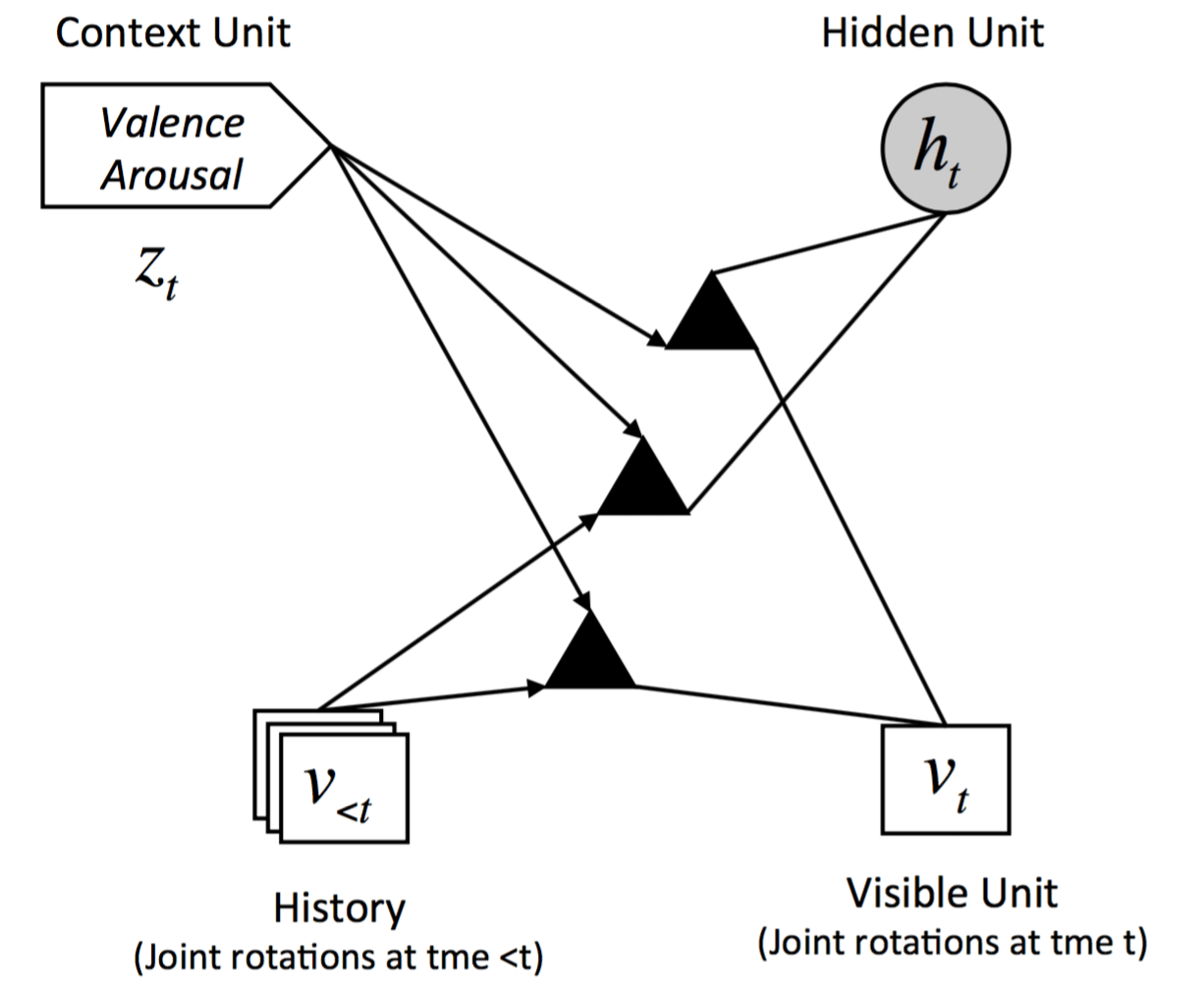

We use Factored Conditional Restricted Boltzmann Machines for modeling the expressive qualities of movement.
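The following is a minimal NumPy sketch of FCRBM inference, following the factored three-way formulation introduced by Taylor and Hinton (2009): style labels are projected to real-valued features `z`, which multiplicatively gate both the visible-hidden interaction and the autoregressive biases computed from the past frames. It is a sketch under stated assumptions, not AffectNet's implementation: the layer sizes, the weight names, the continuous two-dimensional (valence, arousal) label, the unit-variance Gaussian visibles, and the random untrained weights are all illustrative.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


class FCRBMSketch:
    """Untrained FCRBM sketch with unit-variance Gaussian visibles and binary hiddens."""

    def __init__(self, n_vis, n_hid, n_label, n_feat, n_factors, order, seed=0):
        self.rng = np.random.default_rng(seed)

        def w(*shape):  # small random weights; a trained model would learn these
            return self.rng.normal(0.0, 0.01, shape)

        n_past = n_vis * order  # flattened history v_{<t} of `order` frames
        self.L = w(n_label, n_feat)  # labels y -> style features z
        # Factored visible-hidden-style interaction
        self.Wv, self.Wh, self.Wz = w(n_vis, n_factors), w(n_hid, n_factors), w(n_feat, n_factors)
        # Factored past-to-visible and past-to-hidden (autoregressive) connections
        self.Av, self.Ap, self.Az = w(n_vis, n_factors), w(n_past, n_factors), w(n_feat, n_factors)
        self.Bh, self.Bp, self.Bz = w(n_hid, n_factors), w(n_past, n_factors), w(n_feat, n_factors)
        self.a, self.b = np.zeros(n_vis), np.zeros(n_hid)

    def dynamic_biases(self, past, z):
        # Style-gated contributions of the history to the visible and hidden biases
        a_hat = self.a + self.Av @ ((past @ self.Ap) * (z @ self.Az))
        b_hat = self.b + self.Bh @ ((past @ self.Bp) * (z @ self.Bz))
        return a_hat, b_hat

    def hidden_probs(self, v, z, b_hat):
        # p(h_j = 1 | v, v_{<t}, y) through the factored three-way interaction
        return sigmoid(b_hat + self.Wh @ ((v @ self.Wv) * (z @ self.Wz)))

    def visible_mean(self, h, z, a_hat):
        # Mean of the Gaussian p(v | h, v_{<t}, y), assuming unit variance
        return a_hat + self.Wv @ ((h @ self.Wh) * (z @ self.Wz))

    def generate(self, seed_frames, labels, n_gibbs=30):
        """Generate one frame per label row, sliding the history window forward."""
        window = np.array(seed_frames, dtype=float)  # shape (order, n_vis), oldest first
        frames = []
        for y in np.atleast_2d(labels):
            z = np.asarray(y, dtype=float) @ self.L
            a_hat, b_hat = self.dynamic_biases(window.reshape(-1), z)
            v = window[-1].copy()  # start the Gibbs chain from the most recent frame
            for _ in range(n_gibbs):
                ph = self.hidden_probs(v, z, b_hat)
                h = (self.rng.random(ph.shape) < ph).astype(float)
                v = self.visible_mean(h, z, a_hat)
            frames.append(v)
            window = np.vstack([window[1:], v])
        return np.array(frames)


model = FCRBMSketch(n_vis=6, n_hid=10, n_label=2, n_feat=4, n_factors=8, order=3)
# Five frames with a hypothetical constant label: mildly positive valence, mildly low arousal
motion = model.generate(np.zeros((3, 6)), np.tile([0.5, -0.5], (5, 1)))
print(motion.shape)  # (5, 6)
```

Because each row of `labels` is its own (valence, arousal) pair, the affect can change from frame to frame, and any continuous pair can be fed in, not only the levels seen during training.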

Results

Publication

Alemi, Omid, Li, William, and Pasquier, Philippe. 2015. Affect-expressive movement generation with factored conditional Restricted Boltzmann Machines. IEEE, 442–448.